This is chapter 3.2 of a multi-part series on writing a RISC-V OS in Rust.

Table of Contents → Chapter 3.1 → (Chapter 3.2) → Chapter 4

Memory Management Unit

Video

View this post on YouTube at: https://www.youtube.com/watch?v=-9sRy5UPWJk.

Overview

From the operating system's point of view, we have a large pool of memory from some starting address to some ending address. We can use our linker script (the virt.lds file) to provide us symbols representing the address of the starting and ending points.

Some of the memory will be used for things, such as global variables and stack space. However, we use the linker script to help us with this moving target. The rest of the untouched memory becomes our "heap" space. However, heap in the OS sense isn't quite what it is in the malloc/free sense.

Paging

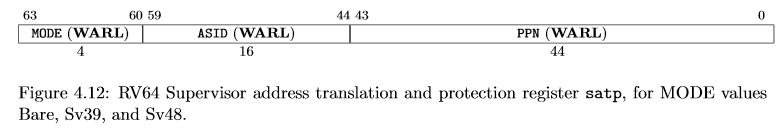

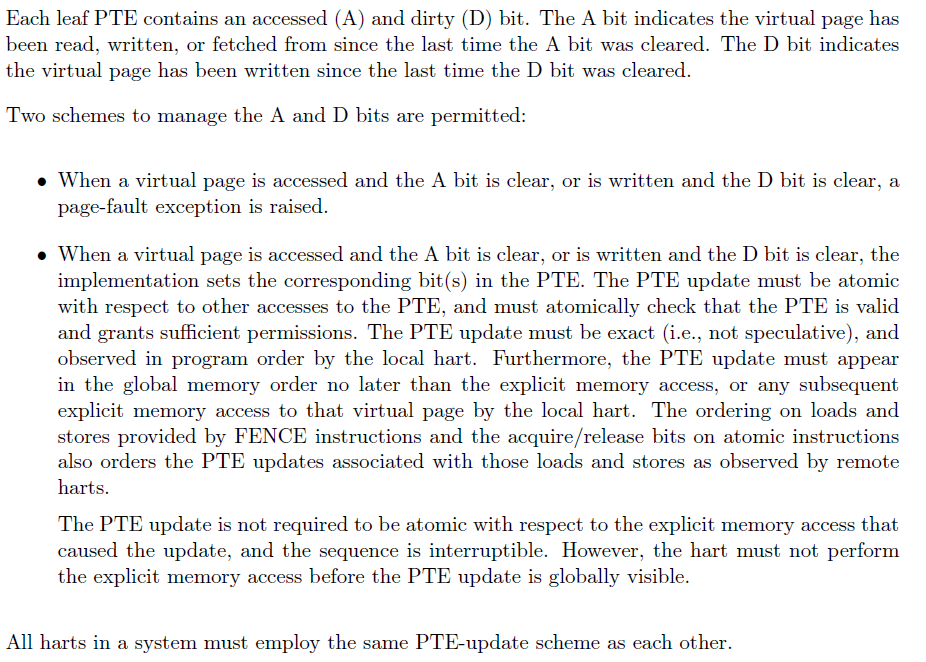

Paging is a system by which a piece of hardware (commonly referred to as the Memory Management Unit or MMU) translates virtual addresses into physical addresses. The translation is performed by reading a privileged register called the SATP or Supervisor Address Translation and Protection register. This register turns the MMU on/off, sets the address space identifier, and sets the physical memory address where the first page table can be found.

We can place these tables anywhere in RAM provided the last 12 bits are 0. This is because the last 12 bits of the page table is not provided in the SATP. In fact, to get the actual memory address, we take 44 bits from the SATP and shift it left by 12 places, which replaces the last 12 bits with 0s. This forms a 56-bit bit address--not quite 64-bits.

There are essentially three fields that we need to be aware of: (1) the virtual address, (2) the physical address, and (3) the page table entry. These are listed in the RISC-V privileged specification chapter 4.4.

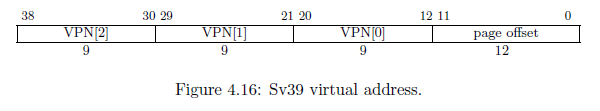

The memory management has different modes. The HiFive Unleashed uses the Sv39 mode, which means the virtual addresses are 39-bits. Virtual addresses in this case cannot explore all 64-bits of memory space. Others are Sv32 for 32-bit virtual addresses and Sv48 for 48-bit virtual addresses.

Virtual Addresses

The Sv39 virtual address contains three 9-bit indices. Since 29 = 512, each table contains exaclty 512 entries, and each entry is exactly 8-bytes (64-bits) as shown below. The Sv39 virtual address contains VPN[x]. The VPN stands for "virtual page number", which is essentially an index into an array of 512, 8 byte entries. So, instead of having the virtual address directly translate into a physical address, the MMU goes through a series of tables. With the Sv39 system, we can have one to three levels. Each level contains a table of 512, 8 byte entries.

Table Entries

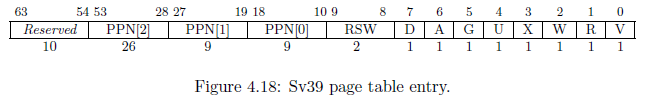

A table entry is written by the operating system to control how the MMU works. We can change how an address is translated or we can set certain bits to protect a page. The page entry is described in the following figure:

Physical Addresses

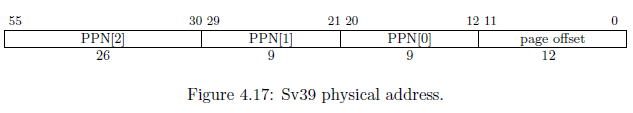

The physical address is actually 56-bits. Therefore, a 39-bit virtual address can translate into a 56-bit physical address. Obviously, this allows us to map the same virtual address to a different physical address--much like how we will map addresses when creating user processes. The physical address is formed by taking PPN[2:0] and placing them into the following format. You will notice that the page offset is directly copied from the virtual address into the physical address.

The SATP Register

All translations begin at the Supervisor Address Translation and Protection (SATP) register shown below and is described in the RISC-V Privileged Specification chapter 4.1.12, which contains the following diagram of the SATP register.

We can see that the PPN (physical page number) is a 44-bit value. However, this value has been shifted right by

12 bits before being stored. This essentially divides the actual number by 4096 (212 = 4,096). Therefore, the

actual address is PPN << 12.

Page Faults

The MMU signals errors in translation to the CPU through three different page faults: (1) instruction, (2) load, and (3) store. An instruction page fault occurs during the instruction fetch phase when the MMU throws a page fault. This can mean that the page entry is not valid or does not have the X (execute) bit set to 1 or some other reason.

Page faults are trapped by the CPU and the mcause or scause register will contain

12 for an instruction page fault, 13 for a load page fault, or 15 for a store page fault. The operating system

can decide what to do then. In most cases, the correct response is to kill the offending process. However, there

are some advanced systems, such as copy-on-write, that are implemented by trapping page faults. In these cases,

a page fault is not a problem, it's a feature.

Translating Virtual Addresses

Take, for example, the virtual address 0x7d_beef_cafe, which is exactly 39 bits. If an address is

longer than 39-bits, the MMU will most likely cause a page fault.

In binary: 0b0111_1101_1011_1110_1110_1111_1100_1010_1111_1110

VPN[2] VPN[1] VPN[0] 12-bit offset

[1_1111_0110] [1_1111_0111] [0_1111_1100] [1010_1111_1110]

502 503 252

The address above will be translated through the following steps:

- Read SATP register and find the top of level 2's page table (

PPN << 12). - Add the offset * size, where offset is

VPN[2] = 0b1_1111_0110 = 502 * 8 = SATP + 4016 - Read this entry.

- If V(valid)=0, page fault

- If this entry's R, W, or X bits are 1, this is a leaf, otherwise it is a branch.

- The PPN[2] | PPN[1] | PPN[0] address shows where in physical memory the next page table is located.

- Repeat at #2 until a leaf is found.

- A leaf means that the PPN[2], PPN[1], and PPN[0] tells the MMU what the physical address actually is.

Every Level Can Be a Leaf

Like most memory management units, the MMU can translate a more coarse-grained page. For example, Intel/AMD x86_64 systems can stop at the PDP, which would give a 1GB resolution, whereas stopping at the PD would give a 2MB resolution. The same can be applied to the RISC-V system. However, RISC-V incorporates a clever way to determine a leaf from a branch.

On Intel/AMD machines, upper levels control the lower levels. If an upper level is read-only, then no branch after that may be writeable. RISC-V knows that this is dumb, so they made it so that if any combination of the R, W, X (read, write, execute) protection bits are set, then the page table entry describes a leaf.

If the page table entry (PTE) is a leaf, then PPN[2:0] describe the physical address. However, if the PTE is a branch, then PPN[2:0] describe where the next page table can be found in physical RAM. One thing to note is that only PPN[2:leaf-level] will be used to develop the physical address. For example, if level 2's (the top level) page table entry is a leaf, then only PPN[2] contributes to the physical address. VPN[1] is copied to PPN[1], VPN[0] is copied to PPN[0], and the page offset is copied, as normal.

Programming the MMU

In Rust, I created three functions that will program the MMU: map, unmap, and virt_to_phys. We need to be able to manually walk the page table because when a user-space application makes a system call, every pointer is a pointer to a virtual memory address--which is not the same as our kernel's. Therefore, we have to drill down to a physical address so that the user-space application and the kernel are talking about the same memory.

I used a couple of structures to make programming a page table entry easier.

pub struct Table {

pub entries: [Entry; 512],

}

impl Table {

pub fn len() -> usize {

512

}

}

The first structure is a Table. This describes a single 4,096 byte table. We get this size by having 512, 8-byte entries. A single entry is described as:

pub struct Entry {

pub entry: i64,

}

impl Entry {

pub fn is_valid(&self) -> bool {

self.get_entry() & EntryBits::Valid.val() != 0

}

// The first bit (bit index #0) is the V bit for

// valid.

pub fn is_invalid(&self) -> bool {

!self.is_valid()

}

// A leaf has one or more RWX bits set

pub fn is_leaf(&self) -> bool {

self.get_entry() & 0xe != 0

}

pub fn is_branch(&self) -> bool {

!self.is_leaf()

}

pub fn set_entry(&mut self, entry: i64) {

self.entry = entry;

}

pub fn get_entry(&self) -> i64 {

self.entry

}

}

Essentially, I aliased the i64 data type as an Entry so that I could add some helper functions to it.

The map function takes a mutable root by-reference, a virtual address, a physical address, the protection bits, and which level this should be mapped to. Typically, we map all pages to level 0, which is the 4KiB level. However, we can specify 2 for a 1GiB page, 1 for a 2MiB page, or 0 for a 4KiB page.

pub fn map(root: &mut Table, vaddr: usize, paddr: usize, bits: i64, level: usize) {

// Make sure that Read, Write, or Execute have been provided

// otherwise, we'll leak memory and always create a page fault.

assert!(bits & 0xe != 0);

// Extract out each VPN from the virtual address

// On the virtual address, each VPN is exactly 9 bits,

// which is why we use the mask 0x1ff = 0b1_1111_1111 (9 bits)

let vpn = [

// VPN[0] = vaddr[20:12]

(vaddr >> 12) & 0x1ff,

// VPN[1] = vaddr[29:21]

(vaddr >> 21) & 0x1ff,

// VPN[2] = vaddr[38:30]

(vaddr >> 30) & 0x1ff,

];

// Just like the virtual address, extract the physical address

// numbers (PPN). However, PPN[2] is different in that it stores

// 26 bits instead of 9. Therefore, we use,

// 0x3ff_ffff = 0b11_1111_1111_1111_1111_1111_1111 (26 bits).

let ppn = [

// PPN[0] = paddr[20:12]

(paddr >> 12) & 0x1ff,

// PPN[1] = paddr[29:21]

(paddr >> 21) & 0x1ff,

// PPN[2] = paddr[55:30]

(paddr >> 30) & 0x3ff_ffff,

];

The first thing we do here is break apart the virtual address and physical address into its components. Notice that we don't care about the page offset--this is because the page offset is never stored. Instead, when the MMU translates a virtual address, it copies the page offset directly into the physical address to form a full 56-bit physical address.

// We will use this as a floating reference so that we can set

// individual entries as we walk the table.

let mut v = &mut root.entries[vpn[2]];

// Now, we're going to traverse the page table and set the bits

// properly. We expect the root to be valid, however we're required to

// create anything beyond the root.

// In Rust, we create a range iterator using the .. operator.

// The .rev() will reverse the iteration since we need to start with

// VPN[2] The .. operator is inclusive on start but exclusive on end.

// So, (0..2) will iterate 0 and 1.

for i in (level..2).rev() {

if !v.is_valid() {

// Allocate a page

let page = zalloc(1);

// The page is already aligned by 4,096, so store it

// directly The page is stored in the entry shifted

// right by 2 places.

v.set_entry(

(page as i64 >> 2)

| EntryBits::Valid.val(),

);

}

let entry = ((v.get_entry() & !0x3ff) << 2) as *mut Entry;

v = unsafe { entry.add(vpn[i]).as_mut().unwrap() };

}

// When we get here, we should be at VPN[0] and v should be pointing to

// our entry.

// The entry structure is Figure 4.18 in the RISC-V Privileged

// Specification

let entry = (ppn[2] << 28) as i64 | // PPN[2] = [53:28]

(ppn[1] << 19) as i64 | // PPN[1] = [27:19]

(ppn[0] << 10) as i64 | // PPN[0] = [18:10]

bits | // Specified bits, such as User, Read, Write, etc

EntryBits::Valid.val(); // Valid bit

// Set the entry. V should be set to the correct pointer by the loop

// above.

v.set_entry(entry);

As you can see with the code above, we allocate a new page using zalloc. Luckily, each page table in RISC-V is exactly 4,096 bytes (512 entries, each 8 bytes), which is the exact size we allocate using zalloc.

One of the challenges for my students is that if you take a look the PPN[2:0] are the same in the PTE and physical address, except they're in different locations. For some reason the PPN[2:0] is shifted to the right by two places, and the physical address starts at bit 10. With the physical address, the physical address starts at bit 12. However, using the approach I took above, where I encode PPN by themselves, we should be safe.

Another interesting aspect about what I've done above is that I always encode ppn[2:0] no matter what level we're at. This is fine for the page table entry, but it might lead to confusion. Remember, if we stop at level 1, then PPN[0] is NOT copied from the PTE. Instead, PPN[0] is copied from VPN[0]. This is performed by the hardware MMU. In the end, we're just adding more information than might be necessary for a page table entry.

Freeing MMU Tables

We will be allocating at least one page per user-space application. However, I plan on only allowing 4KiB pages for user-space applications. Therefore, we need at least three page tables for a single address. This means that we can quickly run out of memory if we don't reuse these pages. To free memory, we will use unmap.

pub fn unmap(root: &mut Table) {

// Start with level 2

for lv2 in 0..Table::len() {

let ref entry_lv2 = root.entries[lv2];

if entry_lv2.is_valid() && entry_lv2.is_branch() {

// This is a valid entry, so drill down and free.

let memaddr_lv1 = (entry_lv2.get_entry() & !0x3ff) << 2;

let table_lv1 = unsafe {

// Make table_lv1 a mutable reference instead of a pointer.

(memaddr_lv1 as *mut Table).as_mut().unwrap()

};

for lv1 in 0..Table::len() {

let ref entry_lv1 = table_lv1.entries[lv1];

if entry_lv1.is_valid() && entry_lv1.is_branch()

{

let memaddr_lv0 = (entry_lv1.get_entry()

& !0x3ff) << 2;

// The next level is level 0, which

// cannot have branches, therefore,

// we free here.

dealloc(memaddr_lv0 as *mut u8);

}

}

dealloc(memaddr_lv1 as *mut u8);

}

}

}

Just like with the map function, this function assumes a legitimate root table (level 2 table). We send it to the unmap function as a mutable reference. So, the logic behind this code is that we start at the lowest level (level 0) and free our way back to the first level (level 2). The code above is basically an iterative approach to a recursive function. Notice that I do not deallocate the root itself. Since we're given a reference of a generic Table structure, we could pass a level 1 table just to free portions of a larger table.

Walking The Page Tables

Finally, we need to manually walk the page table. This will be used to copy data from a virtual memory address. Since the virtual memory addresses are different between the kernel and user processes, we need to talk in physical addresses. The only way to do this is to always convert virtual memory addresses into their physical address equivalent. Unlike the hardware MMU, we can pass any table to this function regardless of whether that table is currently employed by the MMU.

pub fn virt_to_phys(root: &Table, vaddr: usize) -> Option {

// Walk the page table pointed to by root

let vpn = [

// VPN[0] = vaddr[20:12]

(vaddr >> 12) & 0x1ff,

// VPN[1] = vaddr[29:21]

(vaddr >> 21) & 0x1ff,

// VPN[2] = vaddr[38:30]

(vaddr >> 30) & 0x1ff,

];

let mut v = &root.entries[vpn[2]];

for i in (0..=2).rev() {

if v.is_invalid() {

// This is an invalid entry, page fault.

break;

}

else if v.is_leaf() {

// According to RISC-V, a leaf can be at any level.

// The offset mask masks off the PPN. Each PPN is 9

// bits and they start at bit #12. So, our formula

// 12 + i * 9

let off_mask = (1 << (12 + i * 9)) - 1;

let vaddr_pgoff = vaddr & off_mask;

let addr = ((v.get_entry() << 2) as usize) & !off_mask;

return Some(addr | vaddr_pgoff);

}

// Set v to the next entry which is pointed to by this

// entry. However, the address was shifted right by 2 places

// when stored in the page table entry, so we shift it left

// to get it back into place.

let entry = ((v.get_entry() & !0x3ff) << 2) as *const Entry;

// We do i - 1 here, however we should get None or Some() above

// before we do 0 - 1 = -1.

v = unsafe { entry.add(vpn[i - 1]).as_ref().unwrap() };

}

// If we get here, we've exhausted all valid tables and haven't

// found a leaf.

None

}

As you can see with the Rust function above, we are given a constant reference to a page table. We return an Option, which is an enumeration with either

None, which we will use to signal a page fault, or Some(), which we will use to return the physical address.

There are several reasons to page fault using the RISC-V memory management unit. One is to encounter an invalid page table entry (V bit is 0). Another is to have a branch at level 0. Since level 0 is the lowest level, a page fault occurs if we signal there is an even lower level (I guess -1??).

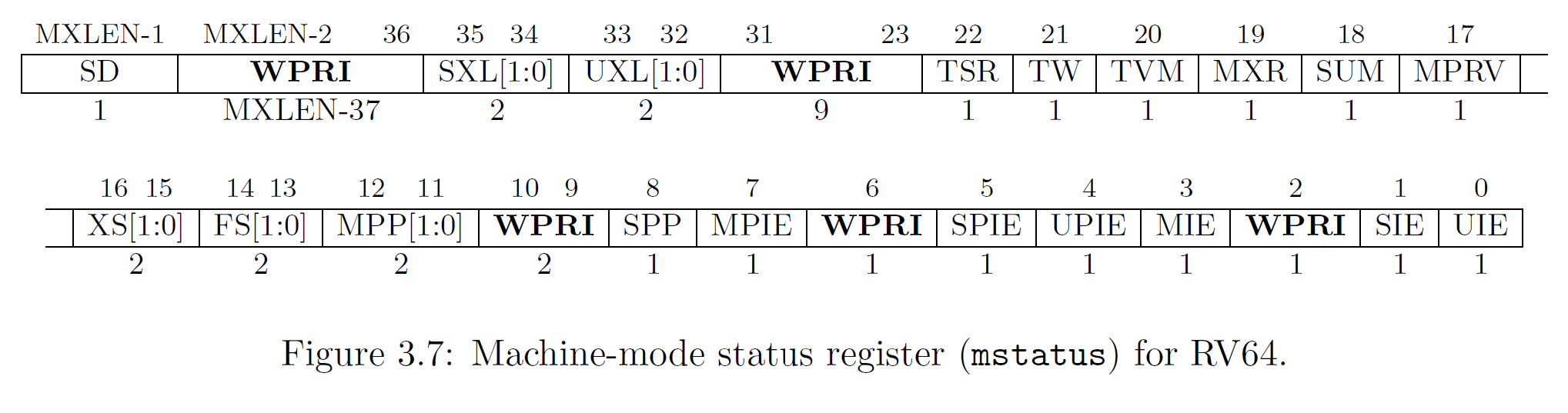

The A and D Bits

If you read the RISC-V privileged spec, the D (dirty) and A (accessed) bits can have two different meanings.

The more traditional meaning for application processors is that the CPU will set the A bit to 1 any time that memory is read from or written to, and the CPU will set the D bit to 1 any time that memory is written to. However, the RISC-V specification also allows the hardware to essentially use these as redundant R and W bits--at least that's my understanding. In other words, we as the kernel programmer control the A and D bits instead of the hardware.

The HiFive Unleashed board uses the latter and will throw a page fault if the D bit (for a store) or A bit (for a load) are 0 on a page table entry. So, let's examine these individually:

- the MMU will throw a page fault if you attempt to write a page whose D bit is 0, much like if the W bit is 0.

- the MMU will throw a page fault if you attempt to read a page whose A bit is 0, much like if the R bit is 0.

In short, the two options are (a) software control or (b) hardware control.

Mapping the Kernel

We are going to switch to Supervisor mode, which allows us to use the MMU for kernel memory. However, we first need to map everything that we need. In RISC-V, the machine mode uses physical memory addresses. However, if we switch the MMU on (set MODE field to 8), then we can use the MMU in supervisor or user mode.

To do this, we are going to identity map (virtual address = physical address) everything we need in the kernel, to include the program code, global sections, UART MMIO address, and so forth. First, I wrote a function in Rust that will help me identity map a range of addresses.

pub fn id_map_range(root: &mut page::Table,

start: usize,

end: usize,

bits: i64)

{

let mut memaddr = start & !(page::PAGE_SIZE - 1);

let num_kb_pages = (page::align_val(end, 12)

- memaddr)

/ page::PAGE_SIZE;

// I named this num_kb_pages for future expansion when

// I decide to allow for GiB (2^30) and 2MiB (2^21) page

// sizes. However, the overlapping memory regions are causing

// nightmares.

for _ in 0..num_kb_pages {

page::map(root, memaddr, memaddr, bits, 0);

memaddr += 1 << 12;

}

}

As you can see, this function calls our page::map function, which maps a virtual address to a physical address. We are given the root table as a mutable reference, which we can pass directly to the map function.

Now, we need to use this function to map our program code and all MMIOs we will need for device drivers, later.

page::init();

kmem::init();

// Map heap allocations

let root_ptr = kmem::get_page_table();

let root_u = root_ptr as usize;

let mut root = unsafe { root_ptr.as_mut().unwrap() };

let kheap_head = kmem::get_head() as usize;

let total_pages = kmem::get_num_allocations();

println!();

println!();

unsafe {

println!("TEXT: 0x{:x} -> 0x{:x}", TEXT_START, TEXT_END);

println!("RODATA: 0x{:x} -> 0x{:x}", RODATA_START, RODATA_END);

println!("DATA: 0x{:x} -> 0x{:x}", DATA_START, DATA_END);

println!("BSS: 0x{:x} -> 0x{:x}", BSS_START, BSS_END);

println!("STACK: 0x{:x} -> 0x{:x}", KERNEL_STACK_START, KERNEL_STACK_END);

println!("HEAP: 0x{:x} -> 0x{:x}", kheap_head, kheap_head + total_pages * 4096);

}

id_map_range(

&mut root,

kheap_head,

kheap_head + total_pages * 4096,

page::EntryBits::ReadWrite.val(),

);

unsafe {

// Map heap descriptors

let num_pages = HEAP_SIZE / page::PAGE_SIZE;

id_map_range(&mut root,

HEAP_START,

HEAP_START + num_pages,

page::EntryBits::ReadWrite.val()

);

// Map executable section

id_map_range(

&mut root,

TEXT_START,

TEXT_END,

page::EntryBits::ReadExecute.val(),

);

// Map rodata section

// We put the ROdata section into the text section, so they can

// potentially overlap however, we only care that it's read

// only.

id_map_range(

&mut root,

RODATA_START,

RODATA_END,

page::EntryBits::ReadExecute.val(),

);

// Map data section

id_map_range(

&mut root,

DATA_START,

DATA_END,

page::EntryBits::ReadWrite.val(),

);

// Map bss section

id_map_range(

&mut root,

BSS_START,

BSS_END,

page::EntryBits::ReadWrite.val(),

);

// Map kernel stack

id_map_range(

&mut root,

KERNEL_STACK_START,

KERNEL_STACK_END,

page::EntryBits::ReadWrite.val(),

);

}

// UART

page::map(

&mut root,

0x1000_0000,

0x1000_0000,

page::EntryBits::ReadWrite.val(),

0

);

This a lot of code, which is why I wrote id_map_range. In this code, we first initialize the page

allocation system we created back in chapter 3.1. Then, we allocate a root table, which takes exactly 1 page (4096 bytes). The

point of type casting this memory address back and forth is because we will eventually need to write a physical address

into the SATP (Supervisor Address Translation and Protection) register to get the MMU going.

Now that we have the root table, we need to stick this into the PPN field of the SATP register. The PPN

is essentially the upper 44 bits of a 56-bit physical address of the table. What we do is shift the root table's address to

the right by 12 place, which essentially divides the address by a page size.

let root_ppn = root_u >> 12;

let satp_val = 8 << 60 | root_ppn;

unsafe {

asm!("csrw satp, $0" :: "r"(satp_val));

}

The code above uses the asm! macro, which allows us to write raw assembly using Rust. In here, we use csrw, which means "Control and Status Register - Write". The 8 << 60 puts the value 8 in the MODE field of the SATP register, which is the Sv39 scheme.

Switching The MMU On

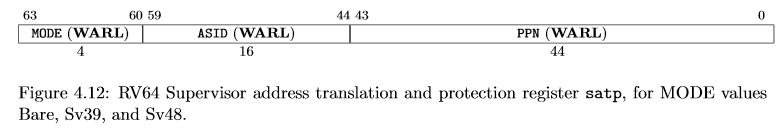

Just because we wrote 8 in the MMU mode, we don't quite have the MMU turned on. The reason is because we're in CPU mode #3, which is the machine mode. So, we need to switch to "supervisor" mode, which is mode #1. We do this by writing the binary number 01 in the MPP (Machine Previous Privilege) field in the mstatus register (bits 11 and 12).

We need to be careful switching the SATP register since the table is cached. The more time we switch this, we need to flush: out with the old, in with the new. When user processes start rolling in and out of context, we will synchronize the page tables. If you're interested now, see the RISC-V privileged specification, chapter 4.2.1, however, we'll be covering that in detail when we start running processes.

li t0, (1 << 11) | (1 << 5)

csrw mstatus, t0

la t1, kmain

csrw mepc, t1

mret

What we do above is enable interrupts and put the value 1 starting at bit 11 (MPP=01). When we execute mret,

we go to a Rust function called kmain. We are now in supervisor mode with the MMU fully turned on.

We have not handled any page faults, yet, so be careful! If you go outside of your address space, your kernel will hang.

Table of Contents → Chapter 3.1 → (Chapter 3.2) → Chapter 4