This is chapter 3.1 of a multi-part series on writing a RISC-V OS in Rust.

Table of Contents → Chapter 2 → (Chapter 3.1) → Chapter 3.2

Page-grained Memory Allocation

Video

The video for this post is at: https://www.youtube.com/watch?v=SZ6GMxLkbx0.

Overview

When we boot, everything inside of the .elf file is automatically loaded into memory. When looking at the code, we allocated 128 megabytes (128 million bytes)

to the entire system. Therefore, we are left with what remains from all of the global variables we've declared and all of the code itself.

When managing memory, we have to manage essentially three parts: 1) page-grained allocations, 2) byte-grained allocations, and 3) programming the memory management unit.

Page-grained Allocations

Page-grained allocations means that the amount of memory one allocation provides is a page. In most architectures, the smallest page size is 4,096 bytes. RISC-V is no different. We will be using the SV39 MMU paging system, which we will discuss in more detail in part #3.

There are several ways we can allocate pages at a time. Since we know the size of every allocation, it makes this fairly straight forward. Many OSes use a linked-list where a pointer to the head of the list is the first unallocated page. Then each unallocated page uses 8 bytes to store a next pointer. That next pointer will be the memory address of the next page. Since these bytes are stored in the page themselves, it doesn't require an overhead of memory.

The drawback of this system is that we must keep track of every page allocated since one pointer is one page.

struct FreePages {

struct FreePages *next;

};

Descriptor Allocations

Our operating system will be using descriptors. We can allocate contiguous pages and only store the head of the pointer. However, to do this, we require 1 byte per 4096 page. This one byte will contain two flags: 1) is this page taken? and 2) is this the last page of a contiguous allocation?

// These are the page flags, we represent this

// as a u8, since the Page stores this flag.

#[repr(u8)]

pub enum PageBits {

Empty = 0,

Taken = 1 << 0,

Last = 1 << 1,

}

pub struct Page {

flags: u8,

}

In here, we need to allocate one Page structure for each page. Luckily, with the updated linker script, we have the heap address and the size of it:

/*

Finally, our heap starts right after the kernel stack. This heap will be used mainly

to dole out memory for user-space applications. However, in some circumstances, it will

be used for kernel memory as well.

We don't align here because we let the kernel determine how it wants to do this.

*/

PROVIDE(_heap_start = _stack_end);

PROVIDE(_heap_size = _memory_end - _heap_start);

We can calculate the number of pages total in the heap by simple arithmetic: let num_pages = HEAP_SIZE / PAGE_SIZE;, where HEAP_SIZE is a global symbol

with the value of _heap_size and page size is a constant for 4,096. Once again, we have to allocate num_pages number of Page

descriptors to store allocation information.

Allocating

Allocating is done by first searching the descriptors for a block of contiguous, free pages. We are given the number of allocations that we want as a parameter to

alloc. In here, if we find a free slice of pages, we set all of those Pages' descriptors to taken. Then, the last page gets the "Last" bit.

This will help when we go to free an allocation of Pages by keeping track where the last page is located.

pub fn alloc(pages: usize) -> *mut u8 {

// We have to find a contiguous allocation of pages

assert!(pages > 0);

unsafe {

// We create a Page structure for each page on the heap. We

// actually might have more since HEAP_SIZE moves and so does

// the size of our structure, but we'll only waste a few bytes.

let num_pages = HEAP_SIZE / PAGE_SIZE;

let ptr = HEAP_START as *mut Page;

for i in 0..num_pages - pages {

let mut found = false;

// Check to see if this Page is free. If so, we have our

// first candidate memory address.

if (*ptr.add(i)).is_free() {

// It was FREE! Yay!

found = true;

for j in i..i + pages {

// Now check to see if we have a

// contiguous allocation for all of the

// request pages. If not, we should

// check somewhere else.

if (*ptr.add(j)).is_taken() {

found = false;

break;

}

}

}

// ........................................

Simple math can tell us which descriptor points to which page since the first descriptor is the first page, the second descriptor is the second page, ..., the nth descriptor

is the nth page. This 1-to-1 relationship makes the math simply: ALLOC_START + PAGE_SIZE * i. In this formula, ALLOC_START is where the first

usable page exists, PAGE_SIZE is the constant 4,096, and i is the ith page descriptor (starting with 0).

Freeing an Allocation

First, we have to reverse the equation above to calculate which descriptor we're talking about. We can use: HEAP_START + (ptr - ALLOC_START) / PAGE_SIZE. We

are given a pointer to the useable page ptr. We subtract the top of the useable heap from the pointer to give an absolute location to this ptr. Since each allocation

is exactly 4,096 bytes, we divide the page size to get the index. Then, we add the HEAP_START variable which is where the descriptors are located.

pub fn dealloc(ptr: *mut u8) {

// Make sure we don't try to free a null pointer.

assert!(!ptr.is_null());

unsafe {

let addr =

HEAP_START + (ptr as usize - ALLOC_START) / PAGE_SIZE;

// Make sure that the address makes sense. The address we

// calculate here is the page structure, not the HEAP address!

assert!(addr >= HEAP_START && addr < HEAP_START + HEAP_SIZE);

let mut p = addr as *mut Page;

// Keep clearing pages until we hit the last page.

while (*p).is_taken() && !(*p).is_last() {

(*p).clear();

p = p.add(1);

}

// If the following assertion fails, it is most likely

// caused by a double-free.

assert!(

(*p).is_last() == true,

"Possible double-free detected! (Not taken found \

before last)"

);

// If we get here, we've taken care of all previous pages and

// we are on the last page.

(*p).clear();

}

}

In this code, we can see that we keep freeing until we hit the last allocation. If we hit a non-taken page before hitting the last allocation, then our heap is messed up. Typically,

this can happen when a pointer is freed more than once (commonly called a double free). In this case, we can add an assert! statement to help catch these types

of problems, but we have to use the term "possible", since we cannot be sure why the last page wasn't signaled before a non-taken page was found.

Zero Allocation

These 4,096-byte pages will be allocated for kernel use and user-application use. Notice, when we free a page, we don't clear the memory, instead, we just clear the descriptor. Above,

this appears as (*p).clear(). This code only clears the descriptor's bits, not the 4,096-bytes themselves.

For security purposes, we will create a helper function that allocates pages and then clears those pages:

/// Allocate and zero a page or multiple pages

/// pages: the number of pages to allocate

/// Each page is PAGE_SIZE which is calculated as 1 << PAGE_ORDER

/// On RISC-V, this typically will be 4,096 bytes.

pub fn zalloc(pages: usize) -> *mut u8 {

// Allocate and zero a page.

// First, let's get the allocation

let ret = alloc(pages);

if !ret.is_null() {

let size = (PAGE_SIZE * pages) / 8;

let big_ptr = ret as *mut u64;

for i in 0..size {

// We use big_ptr so that we can force an

// sd (store doubleword) instruction rather than

// the sb. This means 8x fewer stores than before.

// Typically we have to be concerned about remaining

// bytes, but fortunately 4096 % 8 = 0, so we

// won't have any remaining bytes.

unsafe {

(*big_ptr.add(i)) = 0;

}

}

}

}

We simply call alloc to do the heavy lifting, allocating the page descriptors and setting the bits. Then, we get to the second half of zalloc, which

is to clear it out to 0. We got lucky because we can use 8-byte pointers to store 8-byte 0s all at once, since 4096 / 8 = 512 and 512 is a whole number. Therefore,

8 byte pointers will cover a 4,096-byte page with only 512 loops.

Testing

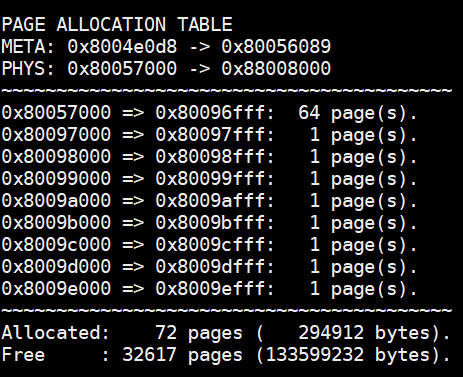

I created a function that prints out the page allocation table by looking at all taken descriptors:

/// Print all page allocations

/// This is mainly used for debugging.

pub fn print_page_allocations() {

unsafe {

let num_pages = HEAP_SIZE / PAGE_SIZE;

let mut beg = HEAP_START as *const Page;

let end = beg.add(num_pages);

let alloc_beg = ALLOC_START;

let alloc_end = ALLOC_START + num_pages * PAGE_SIZE;

println!();

println!(

"PAGE ALLOCATION TABLE\nMETA: {:p} -> {:p}\nPHYS: \

0x{:x} -> 0x{:x}",

beg, end, alloc_beg, alloc_end

);

println!("~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~");

let mut num = 0;

while beg < end {

if (*beg).is_taken() {

let start = beg as usize;

let memaddr = ALLOC_START

+ (start - HEAP_START)

* PAGE_SIZE;

print!("0x{:x} => ", memaddr);

loop {

num += 1;

if (*beg).is_last() {

let end = beg as usize;

let memaddr = ALLOC_START

+ (end

- HEAP_START)

* PAGE_SIZE

+ PAGE_SIZE - 1;

print!(

"0x{:x}: {:>3} page(s)",

memaddr,

(end - start + 1)

);

println!(".");

break;

}

beg = beg.add(1);

}

}

beg = beg.add(1);

}

println!("~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~");

println!(

"Allocated: {:>6} pages ({:>10} bytes).",

num,

num * PAGE_SIZE

);

println!(

"Free : {:>6} pages ({:>10} bytes).",

num_pages - num,

(num_pages - num) * PAGE_SIZE

);

println!();

}

}

Then, start allocating some pages! I've allocated some single pages and a larger 64 pages. You will see the following when printed:

Future

A page-grained allocator wastes a lot of memory for smaller data structures. For example, a linked-list that stores an integer would required 4 bytes + 8 bytes = 12 bytes of memory:

4 for the integer, and 8 for the next pointer. However, we'd have to dynamically allocate one full page. Therefore, we can possibly waste 4,096 - 12 = 4,084 bytes. So, what we can

do is manage each page allocation. This byte-grained allocator will be much like how malloc is implemented in C++.

When we start allocating user-space processes, we will need to protect the kernel memory from errant writes from the user's application. Therefore, we will be using the memory management unit. Particularly, we will be using the Sv39 scheme, which is documented in chapter 4.4 of the RISC-V Privileged Specification.

This chapter will follow two more parts: part 2) the memory management unit (MMU) and part 3) the byte-grained memory allocator for the alloc crate in Rust, which

contains data structures, such as a linked-list and binary tree. We can also use this crate to store and create Strings.

Table of Contents → Chapter 2 → (Chapter 3.1) → Chapter 3.2